
Internship Experience

A journey through the real world.

A Collaborative Multimodal XR Physical Design Environment

Associated With

NYU Future Reality Lab

Presented at SIGGRAPH Asia 2024

Traditional design processes for physical prototypes can be time-consuming and costly due to iterations of prototyping, testing, and refinement.

Extended Reality (XR) technology with video passthrough can alleviate these issues by providing instant visual feedback. We develop an XR system that provides annotations and enables interactive visual modifications by superimposing visual counterparts on physical objects and aligning them.

With multimodal inputs, the system could help designers quickly experiment with and visualize a wide range of design options, keep track of design evolutions, and explore innovative solutions without the constraints of physical prototyping.

As a result, it could significantly speed up the iteration process with fewer physical modifications in each design iteration.

Citation

Keru Wang, Pincun Liu, Yushen Hu, Xiaoan Liu, Zhu Wang, and Ken Perlin. (2024). A Collaborative Multimodal XR Physical Design Environment. In SIGGRAPH Asia 2024 Extended Abstract (pp. 1-2).

A Survey on Audio-influenced Pseudo-Haptics: Methods, Applications, and Opportunities

Associated With

NYU Future Reality Lab and Music and Audio Research Laboratory

Submitted to CHI 2025

Haptic feedback plays an important role in interaction, but traditional haptic devices are often difficult to access due to their complexity and high cost.

A cost-effective alternative is to leverage auditory cues to generate pseudo-haptic sensations using the brain’s multisensory processing abilities. These auditory cues can enhance or modify actual haptic feedback, add detail to low-resolution haptic devices, or create convincing haptic illusions without physical contact.

Despite their potential, audio-influenced pseudo-haptics remain underexplored.

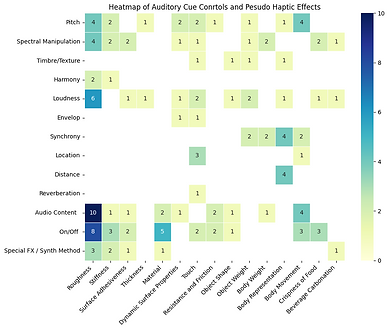

In this survey, we provide a comprehensive overview of existing research on audio-influenced pseudo-haptics, summarizing the mapping between different auditory cues and the pseudo-haptics that they elicit.

We review and categorize system implementations, study methods and applications, identify key challenges, and discuss future opportunities.

We aim to provide a roadmap for audio-influenced pseudo-haptics research and to inspire further exploration in this field, fostering innovation and guiding the development of more immersive, functional, and accessible systems.

Citation

Keru Wang, Yi Wu, Pincun Liu, Zhu Wang, Agnieszka Roginska, Qi Sun, and Ken Perlin. (2024). A Survey on Audio-influenced Pseudo-Haptics: Methods, Applications, and Opportunities. In Proceedings of the CHI Conference on Human Factors in Computing Systems (pp. 1-25).

More projects are in progress; come back soon for updates!
